Tweet NLP

We provide a tokenizer, a part-of-speech tagger, hierarchical word clusters, and a dependency parser for tweets, along with annotated corpora and web-based annotation tools.

Contributors: Archna Bhatia, Dipanjan Das, Chris Dyer, Jacob Eisenstein, Jeffrey Flanigan, Kevin Gimpel, Michael Heilman, Lingpeng Kong, Daniel Mills, Brendan O'Connor, Olutobi Owoputi, Nathan Schneider, Noah Smith, Swabha Swayamdipta and Dani Yogatama.

We provide a fast and robust Java-based tokenizer and part-of-speech tagger for tweets, its training data (tweets manually annotated with POS tags), a web-based annotation tool, and hierarchical word clusters derived from unlabeled tweets.

These were created by Olutobi Owoputi, Brendan O'Connor, Kevin Gimpel, Nathan Schneider, Chris Dyer, Dipanjan Das, Daniel Mills, Jacob Eisenstein, Michael Heilman, Dani Yogatama, Jeffrey Flanigan, and Noah Smith.

./runTagger.sh --output-format conll examples/casual.txt

These are real tweets.

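ikr smh he asked fir yo last name so he can add u on fb lololol

| word | tag | confidence |
|---|---|---|
| ikr | ! | 0.8143 |
| smh | G | 0.9406 |
| he | O | 0.9963 |
| asked | V | 0.9979 |
| fir | P | 0.5545 |
| yo | D | 0.6272 |
| last | A | 0.9871 |
| name | N | 0.9998 |
| so | P | 0.9838 |
| he | O | 0.9981 |
| can | V | 0.9997 |
| add | V | 0.9997 |
| u | O | 0.9978 |
| on | P | 0.9426 |
| fb | ^ | 0.9453 |
| lololol | ! | 0.9664 |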


:o :/ :'( >:o (: :) >.< XD -__- o.O ;D :-) @_@ :P 8D :1 >:( :D =| ") :> ....

| word | tag | confidence |
|---|---|---|
| :o | E | 0.9387 |
| :/ | E | 0.9983 |
| :'( | E | 0.9975 |
| >:o | E | 0.9964 |
| (: | E | 0.9994 |
| :) | E | 0.9997 |
| >.< | E | 0.9952 |
| XD | E | 0.9938 |
| -__- | E | 0.9956 |
| o.O | E | 0.9899 |
| ;D | E | 0.9995 |
| :-) | E | 0.9992 |
| @_@ | E | 0.9964 |
| :P | E | 0.9996 |
| 8D | E | 0.9961 |
| : | E | 0.6925 |
| 1 | $ | 0.9194 |
| >:( | E | 0.9715 |
| :D | E | 0.9996 |
| =\| | E | 0.9963 |
| " | , | 0.6125 |
| ) | , | 0.9078 |
| : | , | 0.7460 |
| > | G | 0.7490 |
| ... | , | 0.5223 |
| . | , | 0.9946 |

Challenge case for emoticon segmentation/recognition: 20/26 precision, 18/21 recall.
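The word/tag/confidence output shown above can be read back into a program for further processing. The following is a minimal sketch, assuming that `--output-format conll` writes one token per line as tab-separated word, tag, and confidence, with a blank line between tweets; that layout is an assumption rather than something stated on this page. The names `TaggedToken`, `read_tagged_tweets`, and `low_confidence` are hypothetical helpers, not part of the tagger.

```python
from typing import Iterable, Iterator, List, NamedTuple


class TaggedToken(NamedTuple):
    word: str
    tag: str
    confidence: float


def read_tagged_tweets(lines: Iterable[str]) -> Iterator[List[TaggedToken]]:
    """Yield one list of TaggedToken per tweet.

    Assumed layout (not confirmed by this page): word<TAB>tag<TAB>confidence,
    one token per line, blank line between tweets.
    """
    tweet: List[TaggedToken] = []
    for line in lines:
        line = line.rstrip("\r\n")
        if not line.strip():
            # A blank line closes the current tweet.
            if tweet:
                yield tweet
                tweet = []
            continue
        word, tag, conf = line.split("\t")
        tweet.append(TaggedToken(word, tag, float(conf)))
    if tweet:
        yield tweet


def low_confidence(tweet: List[TaggedToken], threshold: float = 0.7) -> List[TaggedToken]:
    """Return tokens whose tag confidence falls below the threshold."""
    return [tok for tok in tweet if tok.confidence < threshold]


# A few rows taken from the first example above, joined with tabs.
sample = "ikr\t!\t0.8143\nsmh\tG\t0.9406\nfir\tP\t0.5545\nyo\tD\t0.6272\n\n"
tweets = list(read_tagged_tweets(sample.splitlines()))
for tok in low_confidence(tweets[0]):
    print(tok.word, tok.tag, tok.confidence)
```

Filtering on confidence is one way to surface tokens such as "fir" and "yo" that the tagger is less sure about; the 0.7 threshold is an arbitrary illustrative value.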

We provide a dependency parser for English tweets, TweeboParser. The parser is trained on a subset of Tweebank, a new labeled corpus of 929 tweets (12,318 tokens) drawn from the POS-tagged tweet corpus of Owoputi et al. (2013).

These were created by Lingpeng Kong, Nathan Schneider, Swabha Swayamdipta, Archna Bhatia, Chris Dyer, and Noah A. Smith.

Thanks to Tweebank annotators: Waleed Ammar, Jason Baldridge, David Bamman, Dallas Card, Shay Cohen, Jesse Dodge, Jeffrey Flanigan, Dan Garrette, Lori Levin, Wang Ling, Bill McDowell, Michael Mordowanec, Brendan O’Connor, Rohan Ramanath, Yanchuan Sim, Liang Sun, Sam Thomson, and Dani Yogatama.

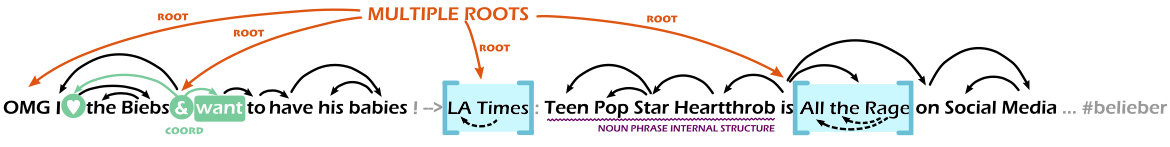

Given a tweet, TweeboParser predicts its syntactic structure, represented by unlabeled dependencies. Since a tweet often contains more than one utterance, the output of TweeboParser will often be a multi-rooted graph over the tweet. Also, many elements in tweets have no syntactic function. These include, in many cases, hashtags, URLs, and emoticons. TweeboParser tries to exclude these tokens from the parse tree (grayed out in the example below).

Please refer to the paper for more information.

An example of a dependency parse of a tweet is:

Corresponding CoNLL format representation of the dependency tree above:

    1 OMG _ ! ! _ 0 _
    2 I _ O O _ 6 _
    3 ♥ _ V V _ 6 CONJ
    4 the _ D D _ 5 _
    5 Biebs _ N N _ 3 _
    6 & _ & & _ 0 _
    7 want _ V V _ 6 CONJ
    8 to _ P P _ 7 _
    9 have _ V V _ 8 _
    10 his _ D D _ 11 _
    11 babies _ N N _ 9 _
    12 ! _ , , _ -1 _
    13 —> _ G G _ -1 _
    14 LA _ ^ ^ _ 15 MWE
    15 Times _ ^ ^ _ 0 _
    16 : _ , , _ -1 _
    17 Teen _ ^ ^ _ 19 _
    18 Pop _ ^ ^ _ 19 _
    19 Star _ ^ ^ _ 20 _
    20 Heartthrob _ ^ ^ _ 21 _
    21 is _ V V _ 0 _
    22 All _ X X _ 24 MWE
    23 the _ D D _ 24 MWE
    24 Rage _ N N _ 21 _
    25 on _ P P _ 21 _
    26 Social _ ^ ^ _ 27 _
    27 Media _ ^ ^ _ 25 _
    28 … _ , , _ -1 _
    29 #belieber _ # # _ -1 _
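The multi-rooted structure described above can be recovered from this representation with a few lines of code. The sketch below is illustrative only. It assumes eight whitespace-separated columns per token, with the token index in column 1, the word in column 2, the POS tag in column 4, the head index in column 7, and a label in column 8. It also reads a head of 0 as "root of an utterance" and a head of -1 as "excluded from the parse"; that reading is inferred from the example rows, not taken from the parser's documentation. The function names are hypothetical.

```python
from collections import defaultdict


def parse_conll_rows(text):
    """Parse whitespace-separated 8-column rows into dicts (assumed layout)."""
    rows = []
    for line in text.strip().splitlines():
        cols = line.split()
        if len(cols) != 8:
            raise ValueError(f"expected 8 columns, got {len(cols)}: {line!r}")
        rows.append({
            "id": int(cols[0]),
            "form": cols[1],
            "pos": cols[3],
            "head": int(cols[6]),
            "label": cols[7],
        })
    return rows


def summarize(rows):
    """Return (root words, excluded words, head id -> list of child ids)."""
    roots = [r["form"] for r in rows if r["head"] == 0]
    excluded = [r["form"] for r in rows if r["head"] == -1]
    children = defaultdict(list)
    for r in rows:
        if r["head"] > 0:
            children[r["head"]].append(r["id"])
    return roots, excluded, dict(children)


# The first 13 rows of the example above.
sample = """
1 OMG _ ! ! _ 0 _
2 I _ O O _ 6 _
3 ♥ _ V V _ 6 CONJ
4 the _ D D _ 5 _
5 Biebs _ N N _ 3 _
6 & _ & & _ 0 _
7 want _ V V _ 6 CONJ
8 to _ P P _ 7 _
9 have _ V V _ 8 _
10 his _ D D _ 11 _
11 babies _ N N _ 9 _
12 ! _ , , _ -1 _
13 —> _ G G _ -1 _
"""

roots, excluded, children = summarize(parse_conll_rows(sample))
print("roots:", roots)
print("excluded:", excluded)
print("children of token 6:", children[6])
```

Keeping the roots as a list rather than a single node reflects the point made above: one tweet can contain several utterances, each with its own root.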

--model MODELFILENAME

To receive announcements about updates, join the ARK-tools mailing list.

Here is an HTML viewer of the word clusters. They were produced with an unsupervised HMM: Percy Liang's Brown clustering implementation, run on tweets that Lui and Baldwin's langid.py identified as English; see Owoputi et al. (2012) for details.

We recommend the largest one:

| filename | #wordtypes | #tweets | #tokens | #clusters | min count | tweet source |
|---|---|---|---|---|---|---|
| 50mpaths2 | 216,856 | 56,345,753 | 847,372,038 | 1000 | 40 | 100k tweet/day sample, 9/10/08 to 8/14/12 |

Also, here are the smaller ones used in the experiments.
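Because the clusters are hierarchical, a common way to use them is to look up a word's cluster path and take prefixes of that path as coarser or finer features. The sketch below is a rough illustration, assuming each line of a cluster file holds a bit-string path, a word, and a count, separated by tabs; that layout is an assumption about the file and should be checked against the download. The sample rows are invented toy data, not taken from 50mpaths2, and the prefix lengths are arbitrary illustrative choices.

```python
def load_clusters(lines):
    """Map each word to its cluster path.

    Assumed layout (verify against the real file): path<TAB>word<TAB>count.
    """
    word_to_path = {}
    for line in lines:
        line = line.rstrip("\r\n")
        if not line:
            continue
        path, word, _count = line.split("\t")
        word_to_path[word] = path
    return word_to_path


def prefix_features(path, lengths=(2, 4, 6, 8)):
    """Return one feature string per prefix length that fits inside the path."""
    return [f"cluster{n}={path[:n]}" for n in lengths if n <= len(path)]


# Toy rows invented for illustration; real paths and counts will differ.
toy_lines = [
    "0010\tlol\t100",
    "0011\tlmao\t50",
    "1101\tthe\t9000",
]

clusters = load_clusters(toy_lines)
print(prefix_features(clusters["lol"]))
print(prefix_features(clusters["lmao"]))
```

In this toy data, "lol" and "lmao" share the two-bit prefix but differ at four bits, so a model using both features can treat them as related at a coarse level while still distinguishing them at a finer one.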